Programmatic SEO: How to create 659 blog posts in a day

While everyone is talking about the theory that all content on the internet will be written by GPT-3/AI in the near future, I stumbled upon something much more interesting: programmatic SEO.

The inspiration came from @nicocerdeira - who has done an amazing job implementing this strategy with Failory and has helped me a ton on this project.

With a little trial and error, I managed to create 659 blog posts programmatically.

All articles are listicles. Here is an example:

What is programmatic SEO?

Simply put, programmatic SEO is a methodology that lets you create large numbers of pages based on data from a spreadsheet.

The goal

The idea behind this exercise is obviously to create relevant organic search traffic for BitsForDigits.

Two head keywords relevant to our startup acquisition marketplace are "company acquisitions" (what we do) and "private equity firms" (a big part of our customer base).

At the end of the day, I wanted to create two directories.

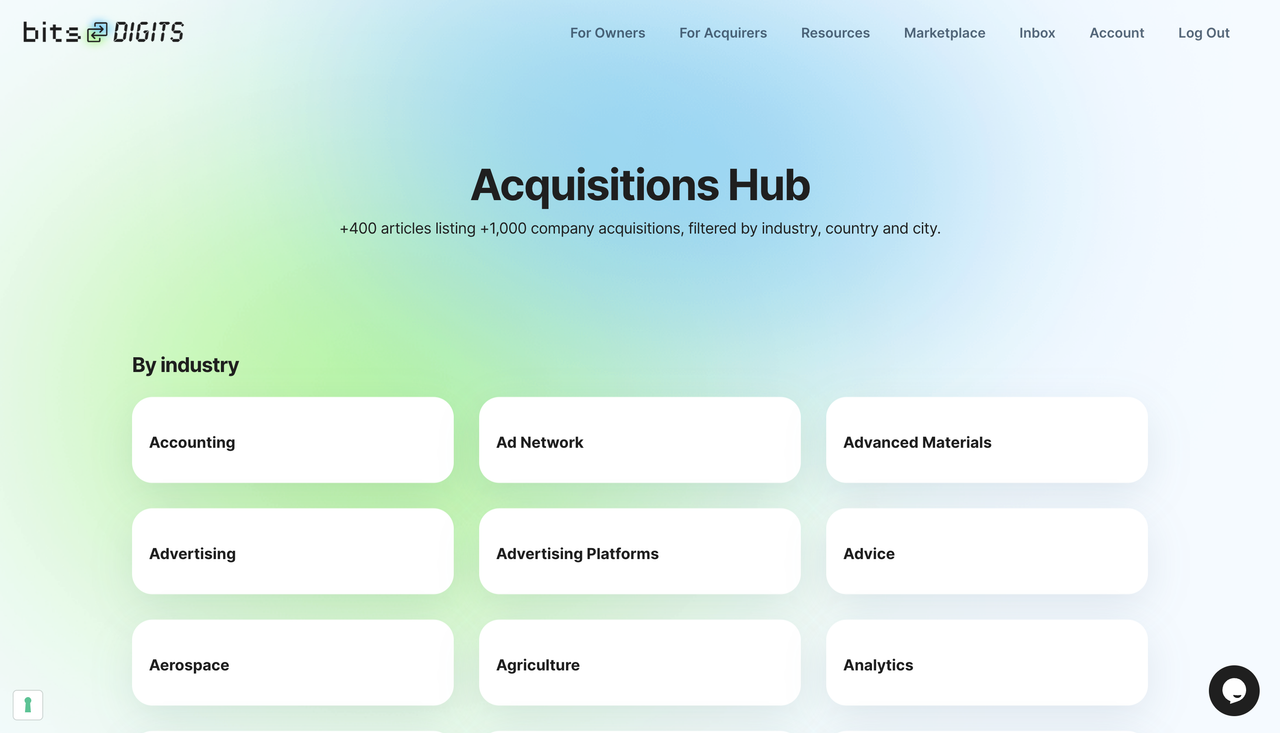

1. The Acquisitions Hub

A directory of 1,000+ startup acquisitions with details about who bought whom, transaction amount, industry etc.

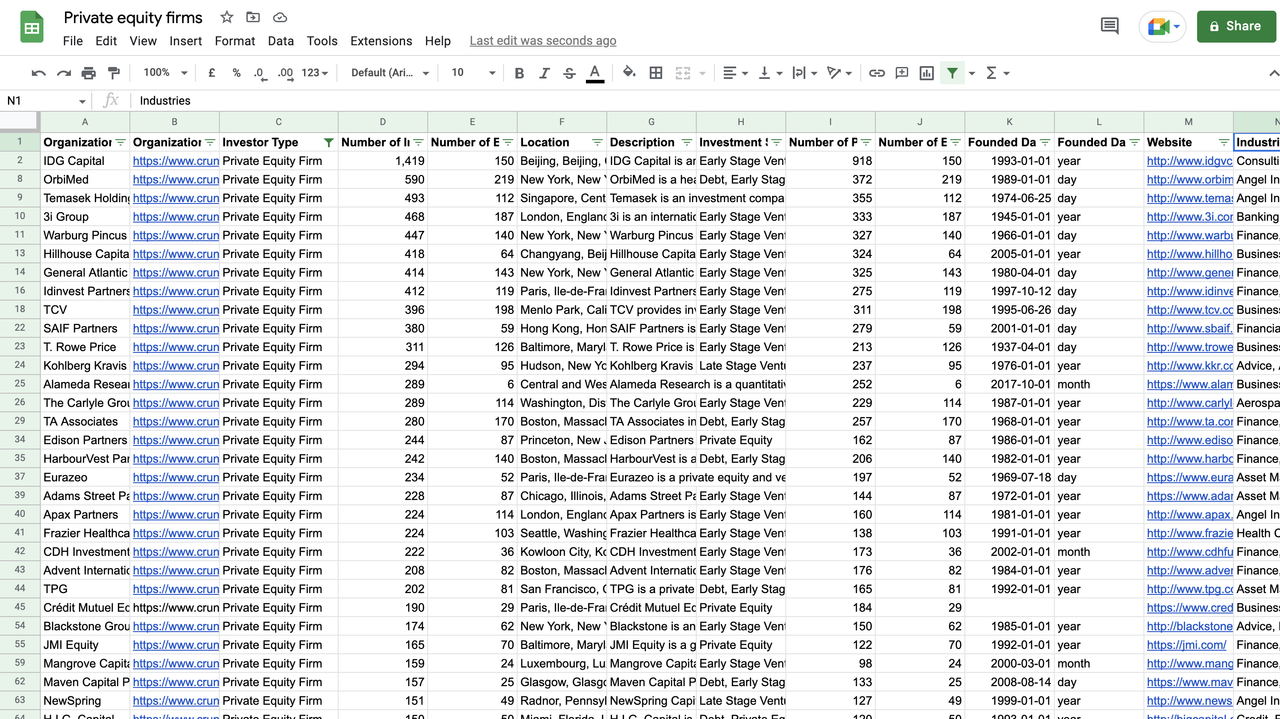

2. The Private Equity Hub

A directory of 1,000+ private equity firms with details about their preferred industries, detailed description, founding date etc.

From these directory pages, our users can choose to read blog posts based on the industry, country or city where these acquisitions happened / private equity firms operate.

As a result, I managed to generate more than 650 listicle-style blog posts in about a day's worth of work.

Step by step guide

Here is how I tackled the project.

Gathering data

Every programmatic SEO project starts with a database (I assume).

This spreadsheet will become the basis of the blog posts, filling them with data that users will want to read about.

I got the data for both hubs from Crunchbase.

Formatting the database

From here, a lot of formatting had to be done.

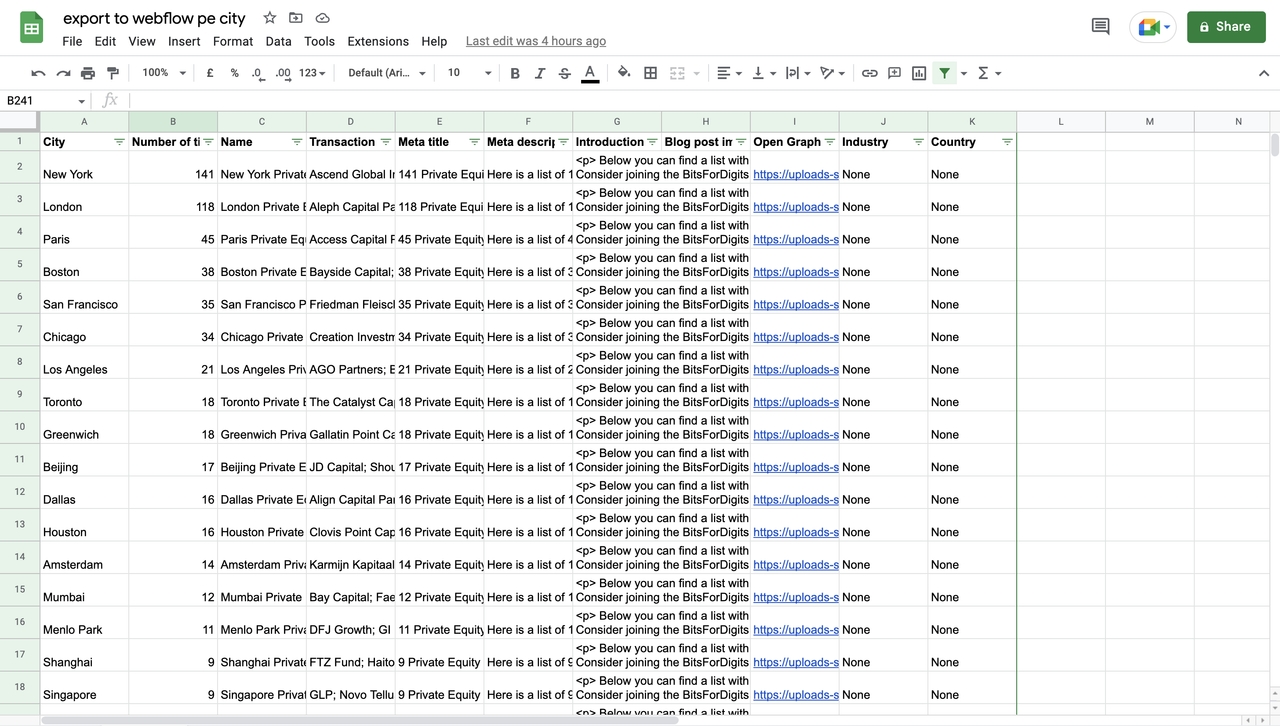

The spreadsheets needed to be prepared in a way that let me upload them as CSV files to Webflow's CMS, which hosts our site.

I broke them down into their respective categories: industry, country and city.

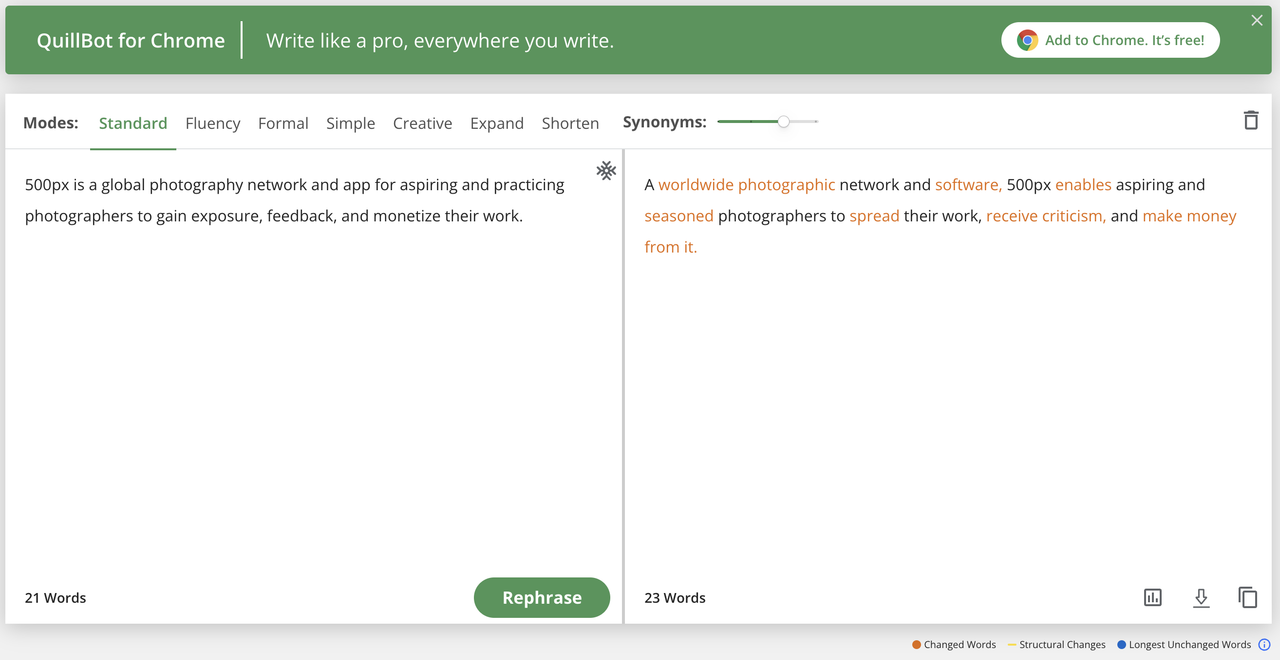
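To make the category breakdown concrete, here is a minimal Python sketch of the idea: group the rows by industry, country and city, then emit one article record per group as CSV. The sample rows, column names, title templates and slug format are all assumptions for illustration; they are not the actual spreadsheet layout or Webflow's required import schema.

```python
import csv
import io
import re
from collections import defaultdict

# Hypothetical sample rows standing in for the cleaned spreadsheet.
ROWS = [
    {"name": "Acme PE", "industry": "Automotive", "country": "Germany", "city": "Berlin"},
    {"name": "Beta Capital", "industry": "Automotive", "country": "Germany", "city": "Munich"},
    {"name": "Gamma Partners", "industry": "Software", "country": "United States", "city": "Austin"},
]

# Hypothetical title templates, one per category type.
TITLE_TEMPLATES = {
    "industry": "{count} {value} Private Equity Firms",
    "country": "{count} Private Equity Firms in {value}",
    "city": "{count} Private Equity Firms in {value}",
}


def slugify(text):
    """Lowercase the text and replace runs of non-alphanumerics with hyphens."""
    return re.sub(r"[^a-z0-9]+", "-", text.lower()).strip("-")


def build_articles(rows, dimensions=("industry", "country", "city")):
    """Return one article record per distinct value of each dimension."""
    groups = defaultdict(list)
    for row in rows:
        for dim in dimensions:
            groups[(dim, row[dim])].append(row["name"])

    articles = []
    for (dim, value), names in sorted(groups.items()):
        articles.append({
            "title": TITLE_TEMPLATES[dim].format(count=len(names), value=value),
            "slug": slugify(f"{value} private equity firms"),
            "dimension": dim,
            "items": "; ".join(names),
        })
    return articles


def to_csv(articles):
    """Serialize the article records to a CSV string."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["title", "slug", "dimension", "items"])
    writer.writeheader()
    writer.writerows(articles)
    return buffer.getvalue()


articles = build_articles(ROWS)
print(to_csv(articles))
```

Each distinct industry, country and city becomes one listicle, so the number of articles grows with the number of distinct category values rather than with the number of rows.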

Changing descriptions with AI

Guess who else uses programmatic SEO as a strategy? Crunchbase, my data source.

Google hates duplicate content - that is why I used an AI tool to rephrase all descriptions.

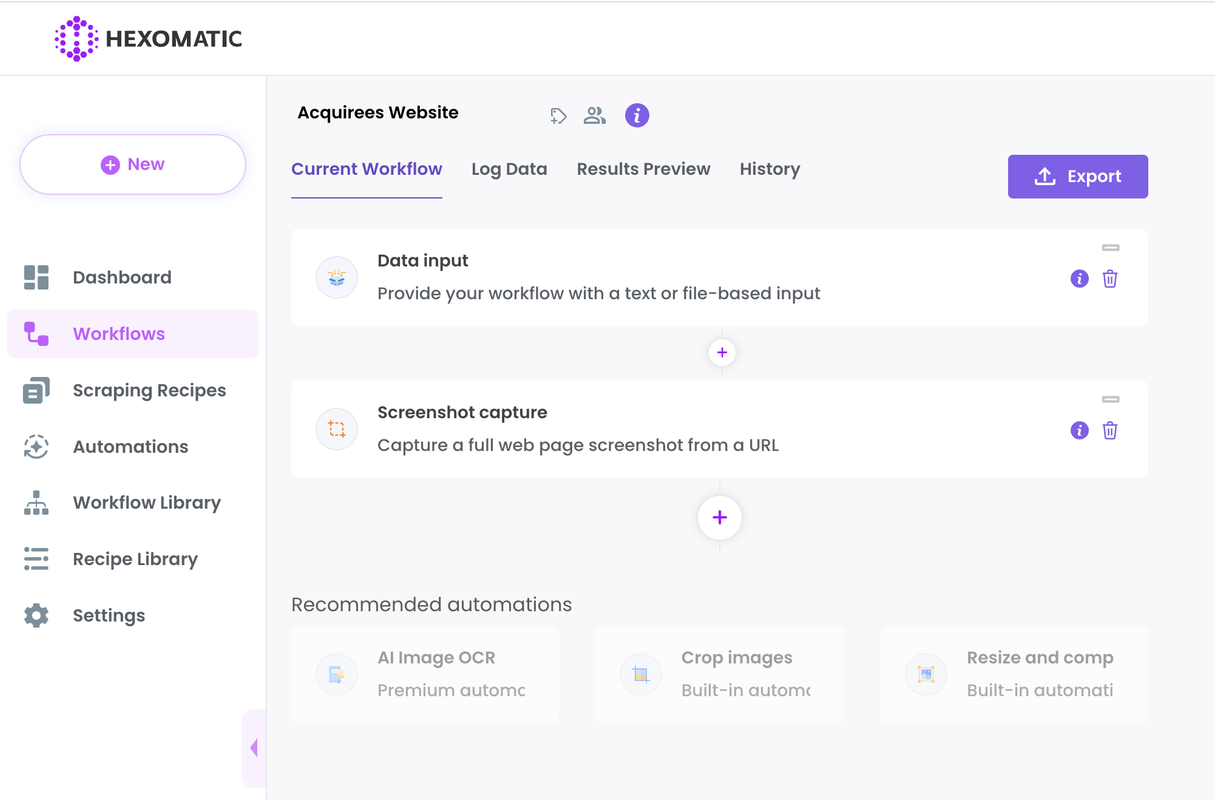
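After rephrasing, it can be useful to check that a description really changed. The sketch below is a rough sanity check using Python's standard `difflib`; the 0.8 threshold and the sample data are assumptions, and this says nothing about how Google itself detects duplicate content.

```python
from difflib import SequenceMatcher


def similarity(original, rewritten):
    """Return a 0..1 character-level similarity ratio, ignoring case."""
    return SequenceMatcher(None, original.lower(), rewritten.lower()).ratio()


def flag_near_duplicates(pairs, threshold=0.8):
    """Return the names whose rewritten text is still very close to the original.

    `pairs` is an iterable of (name, original, rewritten) tuples.
    The threshold is an arbitrary assumption; tune it on your own data.
    """
    return [
        name
        for name, original, rewritten in pairs
        if similarity(original, rewritten) >= threshold
    ]


# Hypothetical sample data.
sample = [
    ("Acme", "Acme builds electric vehicles.", "Acme builds electric vehicles."),
    ("Beta", "Beta invests in software companies.", "A Berlin fund backing SaaS startups."),
]
for name in flag_near_duplicates(sample):
    print(f"{name}: description is nearly unchanged, rephrase again")
```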

Creating images

So far, our blog articles would only consist of text.

To create relevant images at scale, I used Hexomatic to take a screenshot of every acquisition target's / private equity firm's website.

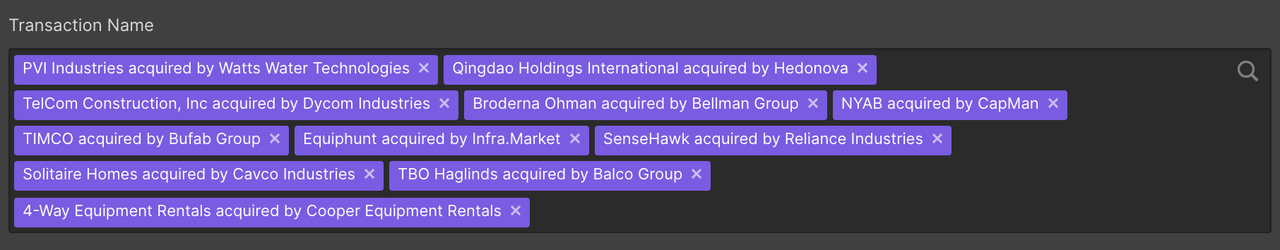

Importing to Webflow

Importing to Webflow happened in two tranches.

1. Individual items

These are the acquisitions / private equity firms that are referenced in the articles.

2. Articles

These are the articles themselves with an introduction, meta description etc. that reference the individual items.

To reference the respective acquisitions and private equity firms, Webflow's multi-reference fields come into play.

Unfortunately, Webflow did not manage to match all fields correctly (not sure if this is a bug or me).

A tool called Powerimporter was of great help at this point.
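One way to catch mismatches before importing is to check that every item an article references actually exists in the items sheet. This is a minimal, tool-agnostic sketch; the `slug` and `item_slugs` field names are hypothetical, and it does not reflect how Webflow or Powerimporter match references internally.

```python
def find_unmatched_references(items, articles):
    """Map each article slug to the referenced item slugs that do not exist."""
    known = {item["slug"] for item in items}
    unmatched = {}
    for article in articles:
        missing = [ref for ref in article["item_slugs"] if ref not in known]
        if missing:
            unmatched[article["slug"]] = missing
    return unmatched


# Hypothetical sample data: one article references an item with a typo in its slug.
items = [{"slug": "acme-pe"}, {"slug": "beta-capital"}]
articles = [
    {"slug": "private-equity-firms-germany", "item_slugs": ["acme-pe", "beta-capital"]},
    {"slug": "private-equity-firms-berlin", "item_slugs": ["acme-pe", "beta-captial"]},
]

for article_slug, missing in find_unmatched_references(items, articles).items():
    print(f"{article_slug}: no item found for {missing}")
```

Running a check like this on the two CSV files first makes it easier to tell whether a failed match comes from the data or from the import tool.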

Things to improve

1. Website screenshots

There are quite a few website screenshots that look a bit messy. For example, there might be a cookie popup in the center of the screen.

I have yet to find a way to get around this issue.
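One possible approach, sketched here with Selenium and headless Chrome, is to remove likely cookie-banner elements before taking the screenshot. This is not what Hexomatic does. The CSS selectors are a crude heuristic I am assuming for illustration; they will miss many banners and can occasionally remove legitimate elements. Banners that load late would also need a wait before the removal step.

```python
import os
import re
from urllib.parse import urlparse

# Heuristic selectors for cookie banners (an assumption, not a complete list).
BANNER_SELECTORS = (
    '[id*="cookie" i], [class*="cookie" i], '
    '[id*="consent" i], [class*="consent" i]'
)


def screenshot_filename(url):
    """Derive a file name such as 'example-com.png' from a URL's host."""
    host = urlparse(url).netloc.lower()
    if host.startswith("www."):
        host = host[4:]
    return re.sub(r"[^a-z0-9]+", "-", host).strip("-") + ".png"


def take_screenshot(url, out_dir="."):
    """Open the page in headless Chrome, strip likely banners, save a PNG."""
    # Imported here so the helper above works without Selenium installed.
    from selenium import webdriver
    from selenium.webdriver.chrome.options import Options

    options = Options()
    options.add_argument("--headless=new")
    options.add_argument("--window-size=1280,800")
    driver = webdriver.Chrome(options=options)
    try:
        driver.get(url)
        # Remove every element matching the heuristic selectors.
        driver.execute_script(
            "document.querySelectorAll(arguments[0]).forEach(el => el.remove());",
            BANNER_SELECTORS,
        )
        path = os.path.join(out_dir, screenshot_filename(url))
        driver.save_screenshot(path)
        return path
    finally:
        driver.quit()
```

Calling `take_screenshot("https://www.example.com/")` would write `example-com.png` to the current directory, provided Selenium and Chrome are installed.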

2. Open Graph images

I am currently using the same standard open graph image on all articles.

Individual images would likely make a better impression, but I have yet to start using tools that do so.
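For reference, a per-article image can be generated from the article title with a few lines of Python and the Pillow library. This is only a sketch under assumptions: the colors, layout and wrap width are arbitrary, and the default bitmap font is tiny, so a real version would load a TrueType font with `ImageFont.truetype`.

```python
import textwrap

OG_SIZE = (1200, 630)  # a commonly recommended Open Graph image size


def wrap_title(title, width=28):
    """Break a title into lines of at most `width` characters."""
    return textwrap.wrap(title, width=width)


def render_og_image(title, path):
    """Draw the wrapped title on a plain background and save it to `path`."""
    # Imported here so wrap_title works without Pillow installed.
    from PIL import Image, ImageDraw, ImageFont

    image = Image.new("RGB", OG_SIZE, color=(20, 30, 60))
    draw = ImageDraw.Draw(image)
    font = ImageFont.load_default()  # placeholder font; very small

    y = 80
    for line in wrap_title(title):
        draw.text((80, y), line, fill=(255, 255, 255), font=font)
        y += 24  # fixed line spacing, chosen arbitrarily
    image.save(path)
    return path
```

Looping `render_og_image` over the article titles in the spreadsheet would produce one image per article, named however the CMS import expects.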

Once I can see the traction on this project, I will decide whether or not to invest time into improving these issues.

To learn more, check out this YouTube deep dive from Nico, Creating a Programmatic SEO Operation with No Code, or ask your questions in the comments.

Feedback is also much appreciated!

Interested in the space of startup acquisitions? Check out BitsForDigits.

I also implemented a similar strategy with my newest venture, airteams.

Sounds like an easy way to get banned by Google.

Can you expand on this? I think there are some other big players using this strategy to acquire users (e.g. Nomad List, Zapier) and it is working very well for them.

I recently read a report from an SEO guy on Twitter who said that it makes sense to add ~20 pages per day and spread the 600 pages over 30 days. Then, after a while, you can apparently add more pages per day. That makes it seem more natural than publishing 600 pages at once.

600 pages isn't that much at the scale of Google. The key is to keep the site fresh (via further publications + updates to the old ones) after the first batch, in order to build on the momentum.

The reason Google would ban you for creating hundreds of SEO posts per day is not that they can't handle it, but that you're actively making search results worse.

Google search results have been getting worse for the past few years since SEO has become popular, and automating SEO is only going to accelerate that process.

Google does not want you to game their system with low quality blog posts. They didn't like it when people were doing it before ChatGPT, and they'll hate you for it now.

When someone uses a search engine, and they see your SEO post, you have made their experience worse. Once people start to see nothing but SEO posts they'll stop using search engines.

"they'll stop using search engines" is quite dramatic

Thanks!! I am less worried now :D

Thanks for sharing your experience!

That is very good to know, thanks! I assume that means adding those pages to the sitemap day by day and not Google's crawl budget, right?

This was the original tweet: https://twitter.com/craig_campbell/status/1611071151932903426

It's about how many subpages you make available on your website each day (incl. adding them to the sitemap).

Thanks, Nik! Really appreciate it :)

I know it's not necessarily the same as blog posts, but I built colorwaze.com, which technically has ~4 billion pages (all hex colors plus opacity), using Next.js ISR. I just submitted the whole sitemap to Google at once. It has only indexed ~22k pages so far, but the site doesn't seem to be penalized.

Wow, 4 billion is a lot. 😂 Can you prioritize them by how often people will search for them to get the right ones indexed? Sitemaps do allow you to specify the importance of each link.

Agreed, 600 pages is nothing in comparison. Did you split your sitemap into 50,000 URL intervals? I thought that is the max you can have per file.

They can work with 301/302 HTTP codes and from my experience, Google takes that into account.

I would be interested to hear feedback from SEO experts!

Wishing you still good luck with this. 🚀

Thanks, will def look into it. And yes, any SEO expert is welcome to pitch in!

Truly horrifying. Thanks for the guide

Good job, thanks for sharing !

This can be a great tool if your website is a directory of some kind (e.g. crunchbase). But I'd be wary of using this with general content such as tutorials, news, etc.

In those circumstances, even setting aside the risk of Google banning you, I think it can negatively impact your site in other areas.

Those types of generated content can be easily spotted from a mile away. One of the metrics Google uses is how long users actually stay on your site.

Personally, if I saw such a blog post as the top result on Google, I'd probably click on it and skim through it before going back and searching for something that provides more value.

Google would see this as a sign that the website's content isn't relevant, which would lower your domain's ranking. The more low-value content you generate in this way, the more users will just click away and lower your average rating.

P.S. For the cookie blocking improvement, the easiest thing I can think of that doesn't rely on external services would be Puppeteer (or Selenium if you don't use Node) with an adblock extension on top, since those are usually configured to hide cookie banners. You could probably stuff that into a Lambda and not worry about scaling.

Ok, first of all, huge thank you for the feedback and thoughts. Will try out those tools as well.

In terms of the content: I don't think programmatic = low relevance. If someone is looking for private equity firms based in Germany, they might find the 27 that are listed on the article super relevant and helpful. Imo, that has nothing to do with the content being programmatically uploaded from a database.

Good job Jan!

Regarding "Things to improve":

Check screenshotapi.net. They have a feature that lets you remove cookie banners and ads from your screenshots.

Check placid.app. I use the blog's title to generate all of the OG images.

Amazing, thanks for the tips!

Quite cool, thanks for sharing. Affordable screenshot tools which we used to remove cookie popups include thum.io and screenshotapi.net

Out of curiosity, have you also tried rephrasing sentences with ChatGPT or the GPT-3 API? Does Quillbot allow for bulk uploads?

Looking forward to a follow-up article on how much traction you got!

Thank you, I will definitely check those out!

I think Quillbot uses GPT3. They do allow for bulk upload, but it caps at about 200 sentences per upload...

Nice work JP! Super insightful.

Thank you, Jelle!

Thanks @jpdavidpeters, very insightful! It's cool to see how you can integrate different tools.

Regarding unwanted cookies, I agree with @kndb, a more sophisticated web scraper might be able to get the job done :)

Thank you, Matteo. I will explore some other scrapers and report back in an update!

Thanks for sharing, I really liked the way you accomplished this.

Thank you so much for the kind words!

This has really helped me with my SEO, so thanks for posting it! Early results look promising. Do you have any update on how things are going with this project?

Glad to hear! I will likely do an update post soon, but it has gone really well. The PE pages are working better than the acquisition pages, so I'm happy I did both.

This project is currently generating 5-figure traffic for BitsForDigits every month. I have not touched it since posting.

What's your project? Then I can have a look. :)

Very impressive! Let's hope my traffic gets there, too! My project is called VCbacked dot co. I basically built individual pages for startups that raised VC at some point and provided data such as the founders' names, funding amount, industry etc.

I shared this with my SEO who is a writer first & foremost, and not incredibly systems-oriented, and I think she is disintegrating 😂

This is an interesting approach we weren't familiar with, so I'll definitely be waiting to hear some of your results. Followed. Can I ask what this cost you, in terms of tools used?

I think Crunchbase Pro is $49/month, Quillbot $20/month and Powerimporter $20/month. Hexomatic was a lifetime deal, but probably cheap.

Hmmm not bad at all. Thanks a ton for answering!!

Thanks for the guide. Props to you for trying this on your actual business website. I wouldn't have the balls to!

I had a different idea of what programmatic SEO is (i.e. you reference NomadList, which just changes the URLs/page content based on the filters), but this works as well. I'd be interested to see an update a few weeks from now!

Haha thanks, Austin! I'll report back how it went. ;)

If Google detects AI content (which they probably will because who can publish 700 articles in a day), they'll just ban you from the index. And you're donezo.

They don't ban it if it is helpful.

But it is not AI generated content. 😄 I only used a bit of AI to rephrase the descriptions.

Great guide! Excited to see the resulting bump in traffic :D And stellar tips for website screenshots and open graph images by @nicocerdeira

Looking forward to seeing that, too!

This is really cool, @jpdavidpeters! Thanks for sharing.

I will definitely try to apply this to my blog for my website. Always amazing to come up with new, cool means for SEO.

For "Things to improve", I think you could try to combine a web scraper that clicks through a cookiebot with an API for taking screenshots of the websites.

Will definitely check out @nicocerdeira's video too.

Thank you - I am still fairly new to webscraping, I am sure there are smart ways to do it! ;)

ok

Very insightful.

Thanks so much for the behind-the-scenes look. Continued success to you.

I wouldn't even call these "blog posts", the title is a little misleading. What's more accurate would be "how I added 659 listings to my directory in a day".

I can't imagine doing this with blog posts, you would quickly get de-indexed by google for low quality content.

Still a cool strategy you used though!

Could you expand a little bit on why you think just because this is based on a directory it immediately is low quality content?

In my mind, if someone searches e.g. "private equity firms in the US" and finds my article with 551 PE firms in the United States... That might be exactly what the person was searching for.

What exactly needs to be added here manually to create value / fulfil search intent?

My bad, I should have clarified. I don't think this is low quality content, I think this is perfect for your use case

I was referring to blog posts specifically, a definition I don't think yours would fall under.

By blog post, I'm referring to longer form content that delves deeper into a subject.

Fair enough. I think the "format" that is created does not matter quite as much as serving search intent, but agreed - you could not do this with an in-depth article that explains a single topic in 3,000 words.

Insane

Thanks for sharing! I am interested to see how effective this is over the next few months.

I think it's a good tool to make content much quicker, but it also needs correction and time for that. However, it's much faster, I'm sure. Thanks for the information.

It's not working; I'm facing issues.

Interesting, I had similar concerns with regard to a possible ban. But out of curiosity, do you have a link to a sample article for one of the 659 blog posts that you created? Trying to see what kind of quality it has.

Sure, here you go: https://www.bitsfordigits.com/acquisitions/automotive-industry-acquisitions

For the Quillbot step, did you manually copy and paste each paragraph?

You can copy and paste about 200 lines in one go, so it is not too bad.

Ah right. Thank you!

Super cool - I kind of want to automate the whole thing. Any ideas how you might be able to do that?

Let me know when you find a way haha

I think this is actually something I might be able to handle in my product (patterns.app)... going to take a stab at replicating later this week and can share a template when done!

Thank you for writing such informative and engaging content. Your insights and perspectives are always enlightening, and I appreciate the time and effort you put into creating valuable content for the readers. Keep up the great work!

This is amazing! The company I work at (https://getmxu.com) just did something similar. We have a library of ~600 training videos in the live production space and we utilized a static site generator (Jekyll) to turn the videos and their related metadata / text content into 'blog posts' / SEO material. The approach can be super useful and quick to accomplish in lieu of manually writing hundreds of content snippets.

Nice work thanks for sharing ! I am pretty new to SEO but it indeed seems to be more viable than GPT-3 generated articles

Hey, being an SEO, I have 2 concerns here:

1 - How are you going to make sure Google will index these pages? And if I force these pages to be indexed, how is Google going to rank such thin and duplicate pages?

2 - How are you going to deal with the internal duplicate content issue?

You could create your dynamic Open Graph Images using Python, quite easy to do (and you don't have to pay for a service like Bannerbear ;-)).

Thank you for sharing this with us. Very valuable information.

We are building Flezr NoCode builder that can do programmatic SEO automatically from Google Sheets data and Supabase data (we are adding more data sources too)

I recommend urlbox.io for screenshots (created by a fellow IndieHacker). The software handles cookie notices like a charm.

Looks pretty pricy - might be a better fit for folks taking A LOT of screenshots ;)

Or simply Python (Selenium package) in headless mode. It's a few lines of code. You can run the script either locally or via a Kaggle notebook or a Google Colab notebook (or a Replit Repl).

They have a starter plan as well: Just getting started?

Try the Starter plan. $10/month. 1,000 requests/month. $0.01 per additional request.

Ah yeah, a bit hidden. Then I'll give it a spin.

Blog Post Seo Setup? https://sarkariprep.in/govt-jobs/

Thank you for sharing your working process with us. Have you seen any results, specifically an increase in traffic from Google?

Given that I only set up these blog posts a few days ago, not much yet. I am getting first clicks and impressions, but not all pages have been indexed yet. I will post an update in a month or two once I see real results. :)

Sounds good!

This original idea bob

Hey, I made a website 4 months ago, but the visitors and earnings are too low. I have published 120 posts and 11 pages, but I cannot earn a decent amount.

So can you tell me how I can increase the traffic of this "all about packages" website (https://itspackages.pk/) naturally to earn money?

Waiting for your response.

Thanks.
